We Ran 11 AI PR Bots in Production

Most AI PR bots are slop machines. I ran 11 of them in a real production repo and ranked them. Here's which ones actually work, plus a suggested "review stack" for each developer/team profile: The Broke, The Budget Conscious and The Kingly Spender.

Below are my final power rankings, along with the rationale for each score. Methodology, raw scores and disclaimers are at the end.

1. Gemini Code Assist - Score 12.5

Best combination of intelligence, speed and price.

Gemini wins by combining intelligence, devx, cost and speed. It ranked second in intelligence, the most heavily weighted scoring category, behind only Cursor Bugbot. It provides inline fixes, is always the first to leave a review, labels each comment with a severity (low/medium/high) and lets you filter on severity. The best part: it's free (at least while the subsidies last and training data is valuable).

Gemini can sometimes be too verbose, but it's the only bot I've seen that performs well across the full range of comment severity, from nitpicks to critical errors. I'd recommend that anyone who can (i.e. anyone who is able to give away their data) add Gemini to their review cycle.
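To make "filtering on severity" concrete, here is a minimal sketch that drops review comments below a chosen threshold. The `ReviewComment` type and the three-level `Severity` scale are hypothetical, modeled on the low/medium/high labels described above; this is not Gemini's actual API or configuration format.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Ordered values so comparisons like Severity.HIGH > Severity.LOW work.
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class ReviewComment:
    # Hypothetical shape of a bot comment; real tools expose their own formats.
    path: str
    line: int
    severity: Severity
    body: str


def filter_by_severity(comments: list[ReviewComment], threshold: Severity) -> list[ReviewComment]:
    """Keep only comments at or above the given severity threshold."""
    return [c for c in comments if c.severity >= threshold]


if __name__ == "__main__":
    comments = [
        ReviewComment("src/api.ts", 12, Severity.LOW, "Consider renaming this variable."),
        ReviewComment("src/db.ts", 40, Severity.HIGH, "Query is built from unsanitized input."),
        ReviewComment("src/util.ts", 7, Severity.MEDIUM, "Missing null check."),
    ]
    for c in filter_by_severity(comments, Severity.MEDIUM):
        print(f"[{c.severity.name}] {c.path}:{c.line} {c.body}")
```

Raising the threshold trades nitpick coverage for less noise, which is the knob that matters most for a verbose bot.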

2. Cursor Bugbot - Score 11.95

The smartest.

Cursor's Bugbot is a clear cut above the rest in the quality of the critical issues it catches. Gemini ranks higher only by being more well-rounded and affordable (read: free), while Cursor Bugbot finds deeper, more impactful bugs. It does so by understanding not just what is being changed in the codebase but what the intent behind the change likely was, and using that to flag probable issues. Bugbot lacks configurability, but it doesn't need any; it just works. Despite my ongoing beef with Cursor (read here), it deserves a place in the stack of anyone who can afford the cost.

3. Cubic - Score 10.15

Up and Coming.

Third place comes with a meaningful drop in topline score. However, Cubic is run by a rapidly iterating team and is quickly differentiating its offering. Its intelligence has grown noticeably in the short time we've been users, and it sometimes catches issues the others miss. Cubic's focus on understanding users and shipping quickly makes it a clear winner in the devx category (a perfect 10), and I expect it to differentiate further as the team iterates and finds winning features. We were early users of their "codebase scan" feature, which is unique among AI review bots (it brings in elements similar to static analysis tools), and we have taken advantage of the Linear + Cursor integrations.

4. OpenAI Codex - Score 8.6

Smart, but broken now.

I'm ultimately disappointed that this ended up ranking so highly. Codex's reviewer simply stopped running for us a number of weeks ago and still isn't working as expected (hence a -1 in devx). However, when it was running it was near the top in terms of intelligence, and that alone puts it one slot off a top-three finish. Being bundled at no extra cost with a ChatGPT Pro subscription is a plus.

5. Greptile - Score 8.55

Solid, but losing ground.

Greptile is the only tool that seems to have regressed while we used it. I was bullish enough to subscribe post-trial, then canceled our subscription after one month. The devx is good and there's plenty of configurability, but intelligence seemingly took a step backward between when we started and stopped subscribing. If we weren't using other bots, Greptile would add value. But outside of a vacuum, it doesn't bring any dimension of comprehensiveness, configurability or intelligence that isn't better covered by another (more affordable) tool.

6. Ellipsis - Score 8.05

Not worth the time.

Basically Greptile but cheaper in both cost and quality.

7. Diamond (Graphite) - Score 7.45

Smart, slow, buggy and expensive.

As with Codex, we can no longer get Diamond to review our PRs (despite contacting support), earning it the second -1 in devx of my rankings. Otherwise the intelligence is good, but it's slow and expensive. As an aside, I am a fan of Graphite as a code review GUI. It does many of the things that GitHub's GUI should be doing: making comments easy to track and resolve, offering integrated AI chat and making it easy to switch between revisions. I was sad to hear that they were acquired by Cursor, though; quality and reliability have seemingly already dropped since then, and now I might end up with a second billing beef.

8. Qodo - Score 6.65

Don't bother.

We're in won't-try-again territory. Qodo hallucinated quite often and lacks the configurability to do anything about the noise. It is relatively affordable, though.

9. Copilot - Score 5.9

Just a rules engine.

Despite ranking near the bottom (primarily because of its intelligence score), Copilot is in our stack and we get real value out of it. However, it serves a role closer to a linter or static analysis tool than an AI reviewer: we use it to enforce custom styling rules and simple best practices. Why? First, its intelligence is horrible. Second, to actually get a Copilot review, a subscriber must explicitly request one. To be useful to us, I expect review bots to run automatically, without anyone clicking a button, and Copilot doesn't allow that. But reviews come free with a Copilot subscription, configurability is solid (create a yaml with the rules you want in your repo) and it does a great job in its narrow lane of enforcing style guidelines.

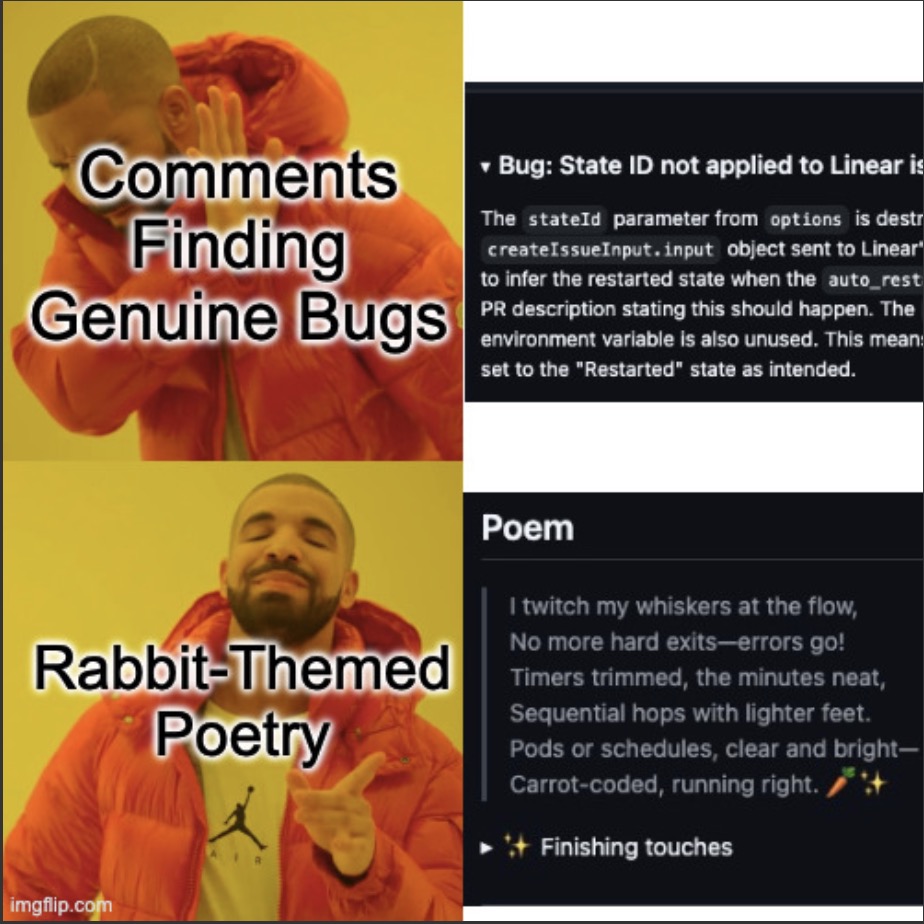

10. CodeRabbit - Score 5.5

Worse than nothing.

This was the first tool we tried and one that I've seen advertised quite heavily. Unfortunately, the rabbit suffers from a toxic combo of a high hallucination rate and verbosity, which makes it not merely neutral but a net negative, even in a vacuum. Its intelligence is low enough that its high comprehensiveness score works against it: more comments mostly means more noise to wade through. The devx was also poor: too many options, a confusing layout and multiple useless features adding unnecessary complexity. The most notable feature was that I could automatically add some AI-slop rabbit-themed poetry to my PR.

The cost must be higher to cover all that marketing spend. I also remember reading that, after we had revoked repo access and suspended the installation, they had a critical security vulnerability (link here).

Unranked: Claude Code

Claude Code has never been set up as a review bot in our repo, so I can't score it. There are two reasons:

- Setup takes more work than for any other tool here and has to be done in GitHub Actions.

- Cost is usage-based. That adds unpredictability and keeps cost constantly in the back of your mind, which can make you hesitant to push.

Claude could be good in some circumstances, but I'd rather have a fixed ceiling than a lower floor. I did recently write this pre-push hook (simple gist) that runs a Claude Code review and appends a comment with the result to the PR, though it's TBD whether we keep it running.
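The gist itself isn't reproduced here, but a hook along these lines could work. This is a hypothetical sketch, not the author's script: it assumes the `claude` CLI (using its non-interactive `-p` print mode, fed the diff on stdin) and the GitHub CLI `gh` are installed and authenticated, and that the branch already has an open PR. The prompt text and the `origin/main` fallback base are illustrative assumptions.

```python
#!/usr/bin/env python3
"""Hypothetical .git/hooks/pre-push sketch: review outgoing commits with
Claude Code and post the result as a comment on the branch's open PR."""
import subprocess
import sys

# Assumed base branch for branches that don't exist on the remote yet.
DEFAULT_BASE = "origin/main"
# Illustrative prompt; adjust to your own review criteria.
PROMPT = "Review this diff for bugs, security issues and risky changes. Be concise."


def is_zero(sha: str) -> bool:
    """Git uses an all-zeros object name for missing or deleted refs."""
    return set(sha) == {"0"}


def diff_ranges(hook_stdin: str) -> list[tuple[str, str]]:
    """Parse pre-push stdin into (branch, revision range) pairs.

    Each stdin line has the form: <local ref> <local sha> <remote ref> <remote sha>
    """
    ranges = []
    for line in hook_stdin.splitlines():
        parts = line.split()
        if len(parts) != 4:
            continue
        _local_ref, local_sha, remote_ref, remote_sha = parts
        if is_zero(local_sha):
            continue  # ref deletion: nothing to review
        if not remote_ref.startswith("refs/heads/"):
            continue  # only review branch pushes, not tags
        branch = remote_ref.removeprefix("refs/heads/")
        base = DEFAULT_BASE if is_zero(remote_sha) else remote_sha
        # Three-dot diff: changes on local_sha since its merge base with `base`.
        ranges.append((branch, f"{base}...{local_sha}"))
    return ranges


def run(cmd: list[str], stdin: str = "") -> str:
    """Run a command, feeding it stdin, and return its stdout (raises on failure)."""
    result = subprocess.run(cmd, input=stdin, capture_output=True, text=True, check=True)
    return result.stdout


def main() -> int:
    try:
        for branch, rev_range in diff_ranges(sys.stdin.read()):
            diff = run(["git", "diff", rev_range])
            if not diff.strip():
                continue
            # Pipe the diff into Claude Code's non-interactive print mode (-p).
            review = run(["claude", "-p", PROMPT], stdin=diff)
            # Comment on the PR for this branch; "--body-file -" reads the body from stdin.
            run(["gh", "pr", "comment", branch, "--body-file", "-"], stdin=review)
    except (subprocess.CalledProcessError, FileNotFoundError) as err:
        # A failed review should never block the push.
        print(f"pre-push review skipped: {err}", file=sys.stderr)
    return 0


# Git invokes pre-push with two arguments: the remote name and the remote URL.
if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main())
```

Because pre-push hooks run synchronously, the push waits for the review to finish; the hook always exits 0 so a failed review never blocks a push.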

Recommendations by Budget

With all that said, here's what we actually run and my recommendations based on budget.

Our Stack

Our current "stack": Gemini, Cursor Bugbot, Copilot, Claude Code CLI hook, Greptile*

*On a 3-month promo they're offering; I don't think we'll continue with it after the trial expires.

The Broke

Gemini + anything you might get for free (Copilot, Codex). Then run free trials on other tools until you're no longer broke, run out of tools to try or run out of code to write :).

The Budget Conscious

Gemini + Cursor Bugbot. If you're budget conscious, you'll get the most mileage per dollar from this simple one-two. Bugbot will catch critical, hard-to-find bugs while Gemini covers most of the rest and can be tuned for sensitivity. You won't be able to configure repo-specific rules the way you can with other tools (Copilot, Greptile, etc.), but a linter or style guide can handle those anyway. What you should care about is bugs, and these two tools will catch most of them for you.

The Kingly Spender

Gemini + Cursor Bugbot + Copilot + Codex + Diamond (Graphite) + Cubic. That's everything except Ellipsis, Claude Code, Greptile, CodeRabbit and Qodo. Why? Because no single bot has a monopoly on catching bugs. Some consistently add more value, while others will mostly repeat what another has already caught; but if cost is no concern (as it isn't for royalty), catch it all. The only asterisk I'll add is that without a tool for deduplicating the repeated comments you'll lose time, since it can get very noisy. I'd also recommend adding some static analysis tools to capture a different dimension of bugs; we use SonarQube + Snyk.
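The core of comment deduplication can be sketched simply: treat two comments as duplicates when they target the same file, land within a few lines of each other and have similar text. This is a hypothetical sketch, not any particular product; the `BotComment` shape, the 3-line window and the 0.6 similarity threshold are assumptions to tune, and text similarity comes from Python's standard `difflib.SequenceMatcher`.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class BotComment:
    # Hypothetical normalized comment collected from any review bot.
    bot: str
    path: str
    line: int
    body: str


def similar(a: str, b: str, threshold: float = 0.6) -> bool:
    """Rough text similarity; SequenceMatcher.ratio() returns a value in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio() >= threshold


def dedupe(comments: list[BotComment], line_window: int = 3) -> list[BotComment]:
    """Keep the first comment of each near-duplicate group, preserving input order."""
    kept: list[BotComment] = []
    for c in comments:
        is_dup = any(
            k.path == c.path
            and abs(k.line - c.line) <= line_window
            and similar(k.body, c.body)
            for k in kept
        )
        if not is_dup:
            kept.append(c)
    return kept
```

Ordering the input by bot (for example, putting the bot you trust most first) decides whose wording survives when several bots flag the same issue.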

Methodology & Disclaimers

Some disclaimers:

- Trials were run at different times, and many of the tools may have changed since I used them.

- We run automatic reviews on PRs ranging from trivially small (a single line) to massive migrations (the entire repo). Each tool's default exclusion settings were used, with no meaningful edits to them.

- Opinions are derived from a primarily pnpm/Node/TypeScript/Next.js stack, heavily backend, with some Go, Rust and Python as well as Helm charts/IaC/YAML.

- Opinions are my own. Scores are vibes-based, reflecting my relative experience with each tool, and the weightings are numbers I chose to reflect each category's relative value.

The score formula was:

$$
\text{Total Score} = (\text{Intelligence} \times 0.8) + (\text{Speed} \times 0.05) + (\text{Comprehensiveness} \times 0.2) + (\text{Configurability} \times 0.1) + (\text{DevX} \times 0.2) + (\text{Cost} \times 0.2)
$$

Raw Data:

| Name | Intelligence | Speed | Comprehensiveness | Configurability | DevX | Cost | Weighted Score |
|---|---|---|---|---|---|---|---|
| Copilot | 2 | 2 | 7 | 8 | 2 | 8 | 4.85 |
| Gemini | 8 | 10 | 9 | 2 | 8 | 10 | 11.15 |
| Codex | 7 | 6 | 5 | 1 | 1 | 8 | 8.05 |
| Qodo (Codium) | 3 | 5 | 7 | 8 | 4 | 5 | 5.6 |
| CodeRabbit | 1 | 6 | 8 | 8 | 5 | 5 | 4.3 |
| Greptile | 4 | 7 | 8 | 6 | 8 | 6 | 7.35 |
| Cursor BugBot | 10 | 5 | 6 | 1 | 8 | 4 | 11.05 |
| Diamond (Graphite) | 7 | 3 | 6 | 1 | 3 | 3 | 7.35 |
| Claude Code | 0 | 0 | 0 | 0 | 0 | 2 | 0.4 |
| Ellipsis | 4 | 7 | 7 | 5 | 5 | 8 | 7 |
| Cubic | 6 | 7 | 8 | 6 | 10 | 4 | 8.95 |
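The weighting is easy to sanity-check in code. This minimal sketch applies the stated weights to a tool's raw category scores; the lowercase dictionary keys are illustrative names, not part of the original methodology. Fed Gemini's raw scores from the table, it returns 12.5, the same figure as Gemini's headline score.

```python
# Category weights as stated in the score formula.
WEIGHTS = {
    "intelligence": 0.8,
    "speed": 0.05,
    "comprehensiveness": 0.2,
    "configurability": 0.1,
    "devx": 0.2,
    "cost": 0.2,
}


def total_score(scores: dict[str, float]) -> float:
    """Weighted sum of the six category scores, rounded to two decimals."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    return round(sum(WEIGHTS[k] * scores[k] for k in WEIGHTS), 2)


gemini = {"intelligence": 8, "speed": 10, "comprehensiveness": 9,
          "configurability": 2, "devx": 8, "cost": 10}
print(total_score(gemini))  # → 12.5
```

Because intelligence carries a weight of 0.8, one point of intelligence is worth four points of comprehensiveness, devx or cost, and sixteen points of speed, which is why the smartest bots dominate the top of the rankings.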